Testing

Welcome back to our blog! This week, we are looking at several aspects of testing.

Testing is becoming more and more important in modern software development. In our project, we focus on three types of tests: UI testing, JUnit testing and UX testing.

UI tests check whether the game UI works properly. We have already implemented such tests; they are described in our blog entry from week 5.

For our game engine, it makes sense to use JUnit for testing.

In the late phases of development, we plan to run some UX tests to get feedback on how self-explanatory our app is.

This week's blog entry mainly deals with JUnit testing.

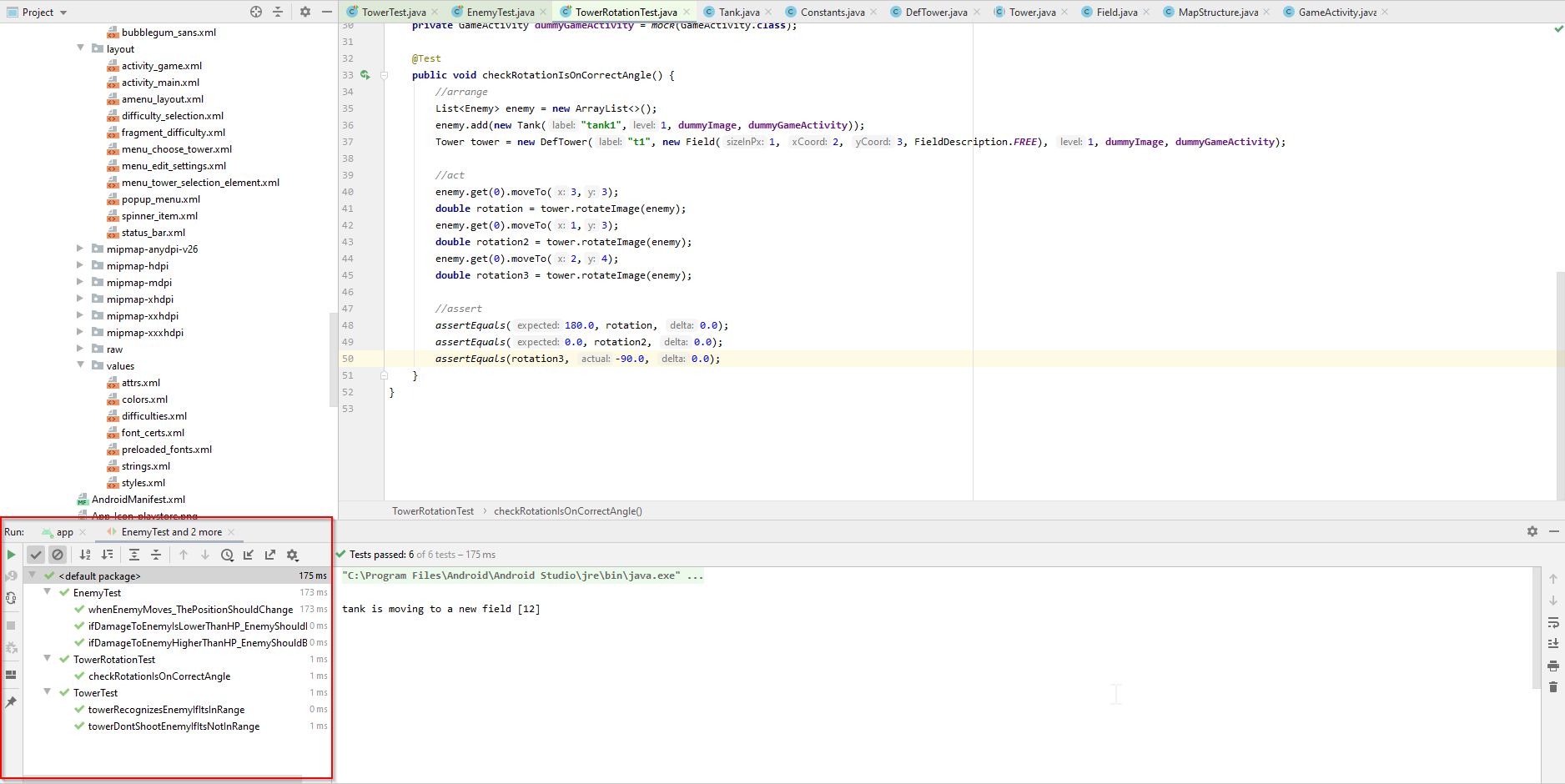

Our GitHub repository contains a folder called "test". This folder holds JUnit tests that check whether our game engine still works. From now on, every push to our GitHub repository starts the build process, and these tests run as part of it. The results of each test run on push are shown in our Android CI area. Below, there is a screenshot of our successful JUnit test run. Our build.gradle file shows how our chosen test libraries are integrated.

At the moment, we cannot write a unit test that covers all aspects of a use case, because the game logic still has many connections to our UI. Nonetheless, we think that our unit tests cover the most important components of most use cases.

Furthermore, our test plan document is now available on GitHub as well.

New Feature: Choose Difficulties!

During sprint 13, we developed the use case "Choose Difficulties".

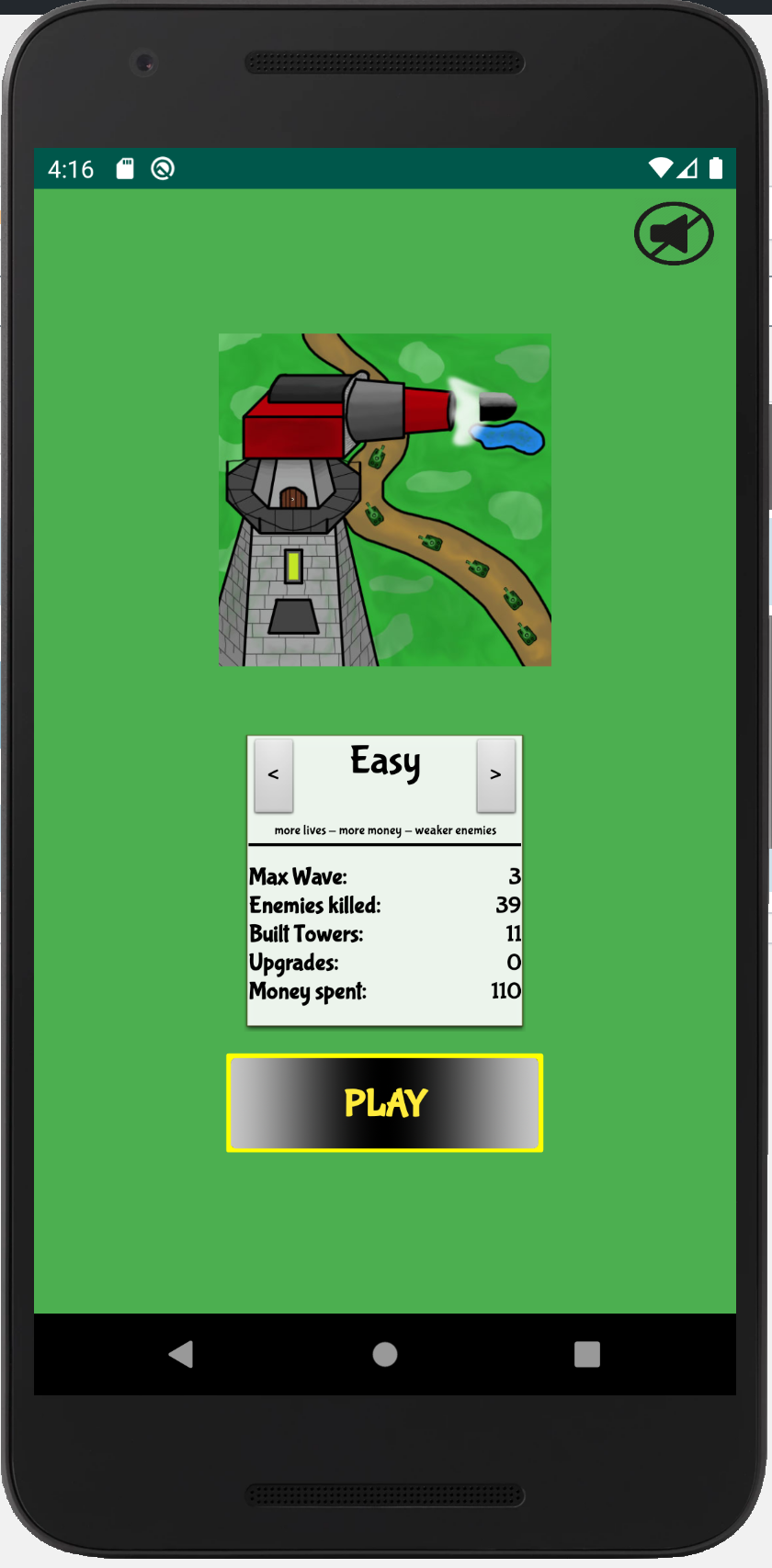

From now on, the main activity shows a box with three panels, one for each difficulty: easy, medium and hard. When the user clicks the play button, the game starts with the currently selected difficulty. Depending on the difficulty, the starting money, the number of lives and the composition of the waves can differ.

We built these panels with Fragments and a ViewPager. Each difficulty panel is its own fragment, and all fragments are embedded in the ViewPager, which is part of the main activity and forms the visible box.
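To illustrate how a difficulty can bundle its starting money, lives and wave composition, here is a minimal sketch assuming a Java enum. The names `Difficulty` and `GameSession`, all numbers, and the simplification of a wave to a plain enemy count are hypothetical and not taken from our code.

```java
import java.util.List;

/**
 * Sketch of per-difficulty game parameters. All numbers are hypothetical
 * placeholders, not the values used in the actual game.
 */
enum Difficulty {
    EASY(500, 20, List.of(5, 8, 12)),
    MEDIUM(350, 15, List.of(8, 12, 18)),
    HARD(200, 10, List.of(12, 18, 25));

    private final int startMoney;
    private final int lives;
    // Simplification (assumption): a wave is modelled only by its enemy count
    private final List<Integer> enemiesPerWave;

    Difficulty(int startMoney, int lives, List<Integer> enemiesPerWave) {
        this.startMoney = startMoney;
        this.lives = lives;
        this.enemiesPerWave = enemiesPerWave;
    }

    int getStartMoney() { return startMoney; }
    int getLives() { return lives; }
    List<Integer> getEnemiesPerWave() { return enemiesPerWave; }
}

/** Hypothetical game state initialised from the difficulty selected in the box. */
class GameSession {
    private final Difficulty difficulty;
    private int money;
    private int lives;

    GameSession(Difficulty difficulty) {
        this.difficulty = difficulty;
        this.money = difficulty.getStartMoney();
        this.lives = difficulty.getLives();
    }

    int getMoney() { return money; }
    int getLives() { return lives; }
    int getWaveCount() { return difficulty.getEnemiesPerWave().size(); }
}
```

When the play button is clicked, something like `new GameSession(Difficulty.HARD)` would start a game with the parameters of the currently visible panel.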

In addition to the new difficulty feature, we added statistics to the game. After a game is won or lost, the values "Max Wave", "Enemies killed", "Built Towers", "Upgrades" and "Money spent" are saved if the latest result is higher than the current high score. These stats are also displayed in the difficulty box on the main activity, because they are stored separately for each difficulty.

The statistics are saved using the Android Shared Preferences. This API lets developers store small amounts of data as key-value pairs in automatically created files. We will use the Shared Preferences again later when we develop the game settings.

We will probably change the design of the main page later on.
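The "save only if higher, per difficulty" rule can be sketched in plain Java. Assumptions: each stat is compared with its own stored high score individually, and the key format `<difficulty>_<stat>` as well as the names `IntStore`, `InMemoryIntStore` and `StatsRepository` are hypothetical. The small `IntStore` interface stands in for Shared Preferences so the sketch runs outside Android; on a device, `getInt(key, 0)` on a `SharedPreferences` instance (obtained via `Context.getSharedPreferences(name, Context.MODE_PRIVATE)`) would read a value, and `edit().putInt(key, value).apply()` would write it.

```java
import java.util.HashMap;
import java.util.Map;

/** Int subset of what SharedPreferences offers; lets the sketch run outside Android. */
interface IntStore {
    int getInt(String key, int defaultValue);
    void putInt(String key, int value);
}

/** In-memory stand-in for SharedPreferences (illustration only). */
class InMemoryIntStore implements IntStore {
    private final Map<String, Integer> data = new HashMap<>();

    @Override
    public int getInt(String key, int defaultValue) {
        return data.getOrDefault(key, defaultValue);
    }

    @Override
    public void putInt(String key, int value) {
        data.put(key, value);
    }
}

/** Hypothetical high-score repository; every stat is stored per difficulty. */
class StatsRepository {
    private final IntStore store;

    StatsRepository(IntStore store) {
        this.store = store;
    }

    // Hypothetical key scheme: one key per difficulty/stat pair, e.g. "HARD_maxWave"
    static String key(String difficulty, String stat) {
        return difficulty + "_" + stat;
    }

    /** Saves the value only if it beats the stored high score; returns true if it was saved. */
    boolean saveIfHigher(String difficulty, String stat, int value) {
        String k = key(difficulty, stat);
        if (value > store.getInt(k, 0)) {
            store.putInt(k, value);
            return true;
        }
        return false;
    }

    /** Returns the stored high score, or 0 if none has been saved yet. */
    int load(String difficulty, String stat) {
        return store.getInt(key(difficulty, stat), 0);
    }
}
```

Because the difficulty is part of every key, the difficulty box can show a separate set of high scores for each panel.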

New Feature: Select between different towers!

Also during sprint 13, we developed the use case "Select between different towers".

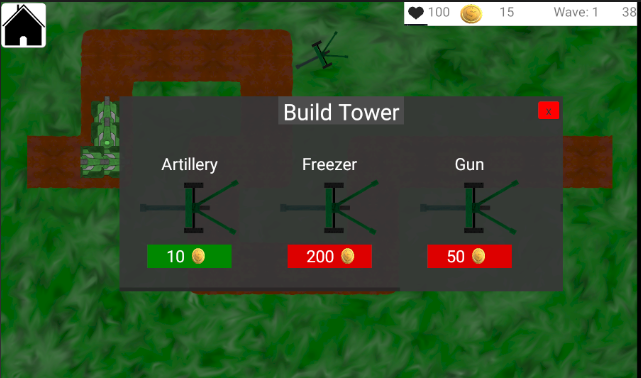

With this use case implemented, players can open a popup window and build a selected tower on a field.

To open the build popup, the player double-clicks a free field. The popup lists all implemented towers and shows each tower's name, image and price. Clicking the price builds the tower, but only if the player has enough money: the price field is green if the player can afford the tower and red otherwise. Clicking a red price tag does nothing; clicking a green one closes the popup and builds the tower. If players do not want to build a tower, they can close the popup with the close button in the top right corner.
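The price-tag rules described above can be expressed without any Android view code. The following is a minimal sketch under the assumption that "enough money" means `money >= price`; the names `PriceTagColor` and `BuildPopupLogic` are hypothetical.

```java
/** Colour of a price tag in the build popup. */
enum PriceTagColor { GREEN, RED }

/**
 * Hypothetical, UI-free sketch of the build popup rules: a price tag is
 * green when the player can afford the tower and red otherwise.
 */
class BuildPopupLogic {
    private int money;

    BuildPopupLogic(int money) {
        this.money = money;
    }

    int getMoney() {
        return money;
    }

    // Assumption: "enough money" means money >= price
    PriceTagColor colorFor(int price) {
        return money >= price ? PriceTagColor.GREEN : PriceTagColor.RED;
    }

    /**
     * Handles a click on a price tag. A red tag does nothing and returns false;
     * a green tag deducts the price and returns true, telling the caller to
     * place the tower and close the popup.
     */
    boolean onPriceClicked(int price) {
        if (colorFor(price) == PriceTagColor.RED) {
            return false;
        }
        money -= price;
        return true;
    }
}
```

Keeping rules like these free of view classes would make them reachable from plain JUnit tests, which relates to the UI connections that currently limit our unit tests.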

The popup window is built from two XML layout files: one defines the frame and the other defines the content.

This separation lets us reuse the frame layout for every popup. Since we plan two more popups, one for upgrading/selling towers and one for changing the settings, it will save us some time in the future.

The picture shows what the popup currently looks like. At the moment, all towers have the same image because the other towers are not implemented yet; they will follow very soon.

Conclusion

All in all, we are really happy with our progress. Even though our project does not make it as easy to test components as we would like, we think we can manage adequate testing.

Moreover, we are looking forward to keeping up the pace of development so that the main functionality is finished before the end of the semester. We will probably present the next use cases in next week's blog entry.

Thanks for your interest and see you soon!

Hi Team Towerdefense,

great to hear about your progress in testing your game. Your JUnit tests look good, although I can’t find the actual test runs in your GitHub Actions. Maybe you could link to one concrete example.

In my opinion, the fact that you can’t test a whole use case with one JUnit test isn’t a problem, as that would be the task of an integration test or something similar. I believe unit tests should only test very small parts of your software; more complex aspects are handled by other kinds of tests.

Your new features also sound really good. I liked how you explained how everything works together.

Looking forward to seeing more.

Yours,

Marlon from Clairvoyance

Hi Marlon,

thank you for the feedback!

I have to admit that the paragraph about unit testing does not make our main point clear. The grading criteria say that we should choose one use case and write tests for each path in the corresponding activity diagram. This is what we cannot cover yet, because of several connections to the UI.

Concerning the tests in our GitHub Actions, I have to agree that we do not have an optimal solution yet. Currently, we use a third-party action called "Android GitHub Action". In the GitHub CI area (e.g. https://github.com/niwa99/Tower-Defense/runs/662499930?check_suite_focus=true), this action is run, but there is no obvious indication that our unit tests are run. Looking into the plugin sources (https://github.com/Vukan-Markovic/Github-Android-Action/blob/master/.github/workflows/main.yml) makes it clearer that the tests are in fact run.

Sorry for the inconvenience! We will probably switch to another tool like Travis later on.

We will keep you up to date :)!

Kind regards,

Nicolas

Hi Towerdefense Team,

you did a nice job with your unit tests so far!

I really like how you use aliases for the test descriptions, so everyone can see what a test does or which test fails, even without knowing the code.

I know there are some difficulties with continuous testing, but I’m confident you will find your way.

Best regards and keep going

Nico

Hi Nico,

thanks for your feedback!

Hopefully, we will soon be able to set up the CI exactly the way we want and need it.

Kind regards,

Nicolas

Hi Team Towerdefense,

your JUnit tests are looking really good. JUnit is a good tool and doesn’t create too much of a hassle (at least in our experience ;D).

The tests are pretty detailed and give a good view of the future of your project.

We are looking forward to your results.

Sincerely,

PiPossible

Hi Team PiPossible,

thank you for your feedback!

We definitely agree that JUnit is a great tool for software engineering in general!

Kind regards,

Nicolas