Metrics - what is that?

Hi everyone and welcome back to our blog!

This week, we are dealing with metrics. To analyze them, we use an Android Studio plugin called "Metrics-Reloaded", which offers many ways to analyze the metrics of a project.

In particular, we want to look at three metrics this week: Cyclomatic Complexity (CYC), the JUnit Test Metric and the JavaDocs Coverage Metric. Explanations of these metrics and refactoring examples from our project follow below.

Cyclomatic Complexity

Cyclomatic complexity measures the complexity of code by counting the independent paths through it. For this, the code is modeled as a control flow graph. The edges, nodes and exit points of this graph are counted and combined into the complexity value. The website tutorialspoint.com explains "What is Cyclomatic Complexity?" very well.
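As a rough illustration of how such a value comes about, the sketch below applies the commonly cited formula M = E - N + 2P (edges, nodes, connected components) to a tiny method. The class and method names are made up for this example and are not taken from our project.

```java
// Sketch only: class and method names are hypothetical, not from our project.
class CyclomaticComplexityDemo {

    // McCabe's formula: M = E - N + 2P
    // E = edges, N = nodes, P = connected components (1 for a single method).
    static int complexity(int edges, int nodes, int components) {
        return edges - nodes + 2 * components;
    }

    // Example method with two decision points (one loop, one if).
    // Counting "decision points + 1" gives the same value as the formula.
    static int countPositive(int[] values) {
        int count = 0;
        for (int v : values) {   // decision point 1
            if (v > 0) {         // decision point 2
                count++;
            }
        }
        return count;
    }

    public static void main(String[] args) {
        // Flow graph of countPositive: 5 nodes (init, loop check, if,
        // increment, return) and 6 edges, in one connected component.
        System.out.println(complexity(6, 5, 1)); // prints 3
    }
}
```

Every additional branch or loop adds one more path through the graph, which is why long if/else chains push the value up quickly.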

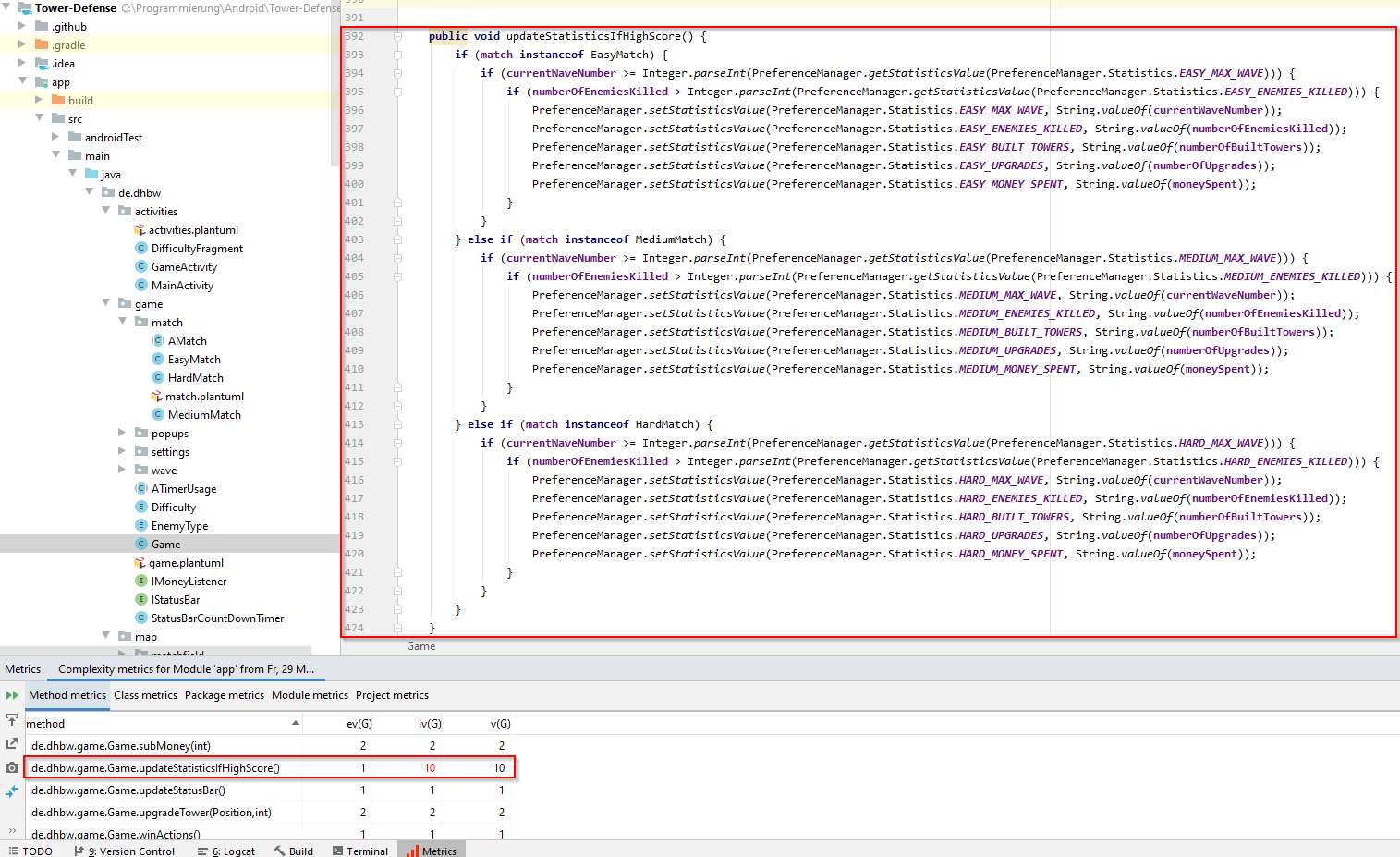

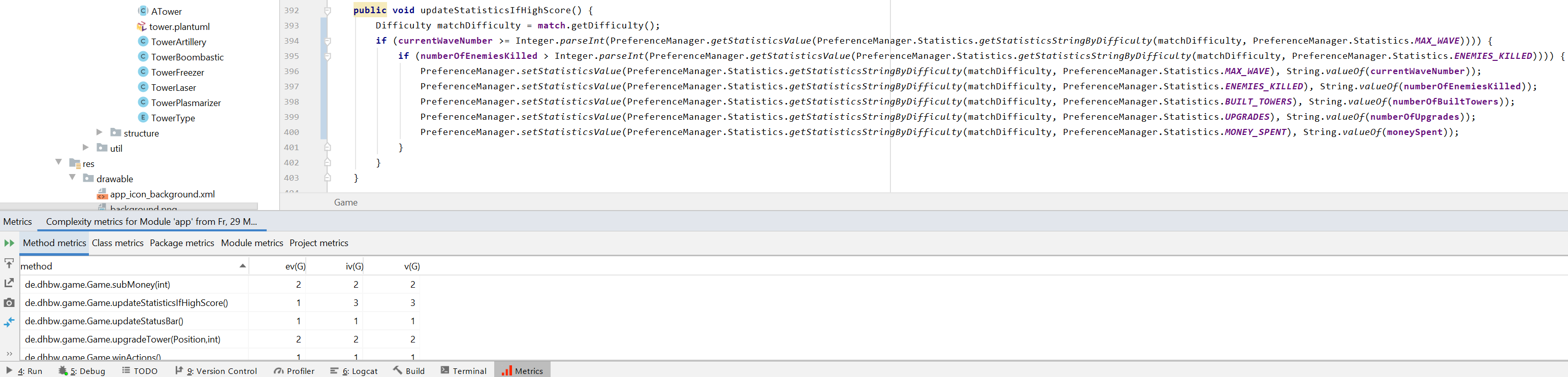

In our tower defense project, only a few methods have high complexity values. As an example, we refactored our method "Game.updateStatisticsIfHighScore()". Before refactoring, the method had a complexity value of 10. After refactoring, the complexity was reduced to 3.

(Open the screenshots in a new tab for better resolution.)

BEFORE Refactoring

AFTER Refactoring

The "updateStatisticsIfHighScore()" method will update the high score for the currently played game-difficulty if the scores of the current game are higher than the saved high scores.

Before refactoring, the difficulty of the match had to be determined by checking the type of the match using "instanceof". Depending on the difficulty, the new score was saved under an explicitly defined key (e.g. EASY_MAX_WAVES, MEDIUM_ENEMIES_KILLED, ...). In fact, the same code was always run, just with different keys for saving the values.

After refactoring, we pass the difficulty of a match as well as the required high score type to a method which generates the keys needed for saving the score values. This way, we save about two thirds of the code in the updateStatisticsIfHighScore method.
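The idea behind the refactoring can be sketched roughly as follows. This is not our actual code: only the key pattern (e.g. EASY_MAX_WAVES) comes from the project, while the enums (including the HARD value), the method names and the in-memory map standing in for the real storage are assumptions made for this example.

```java
import java.util.HashMap;
import java.util.Map;

// Sketch only: all names except the key pattern are hypothetical.
class HighScoreSketch {

    enum Difficulty { EASY, MEDIUM, HARD }
    enum HighScoreType { MAX_WAVES, ENEMIES_KILLED }

    // Stand-in for whatever persistent storage the game really uses.
    private final Map<String, Integer> store = new HashMap<>();

    // Builds keys such as "EASY_MAX_WAVES" or "MEDIUM_ENEMIES_KILLED".
    static String keyFor(Difficulty difficulty, HighScoreType type) {
        return difficulty.name() + "_" + type.name();
    }

    // A single comparison replaces one branch per difficulty and score type.
    void updateIfHighScore(Difficulty difficulty, HighScoreType type, int score) {
        String key = keyFor(difficulty, type);
        if (score > store.getOrDefault(key, 0)) {
            store.put(key, score);
        }
    }

    int highScore(Difficulty difficulty, HighScoreType type) {
        return store.getOrDefault(keyFor(difficulty, type), 0);
    }

    public static void main(String[] args) {
        HighScoreSketch stats = new HighScoreSketch();
        stats.updateIfHighScore(Difficulty.EASY, HighScoreType.MAX_WAVES, 12);
        stats.updateIfHighScore(Difficulty.EASY, HighScoreType.MAX_WAVES, 7); // lower, ignored
        System.out.println(keyFor(Difficulty.EASY, HighScoreType.MAX_WAVES));
        System.out.println(stats.highScore(Difficulty.EASY, HighScoreType.MAX_WAVES));
    }
}
```

Because the key is computed instead of being selected in an if/else chain, adding another difficulty or score type does not add any branches to the update method.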

This solution can probably still be improved, but it shows pretty well how much the cyclomatic complexity can be reduced.

You can also check out our corresponding commit for all changes concerning this metric-refactoring.

JUnit Testing Metric

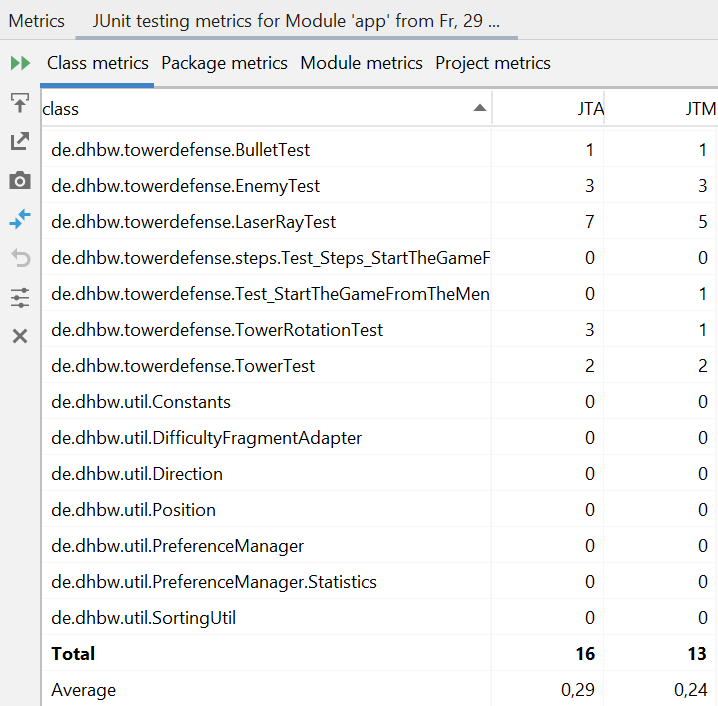

Another metric for analyzing one's code is called "JUnit Testing Metric". As the name already states, numbers concerning JUnit tests are used in order to calculate values.

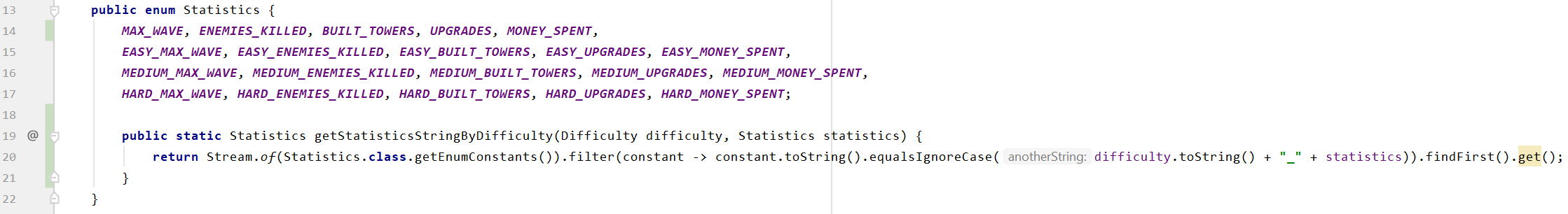

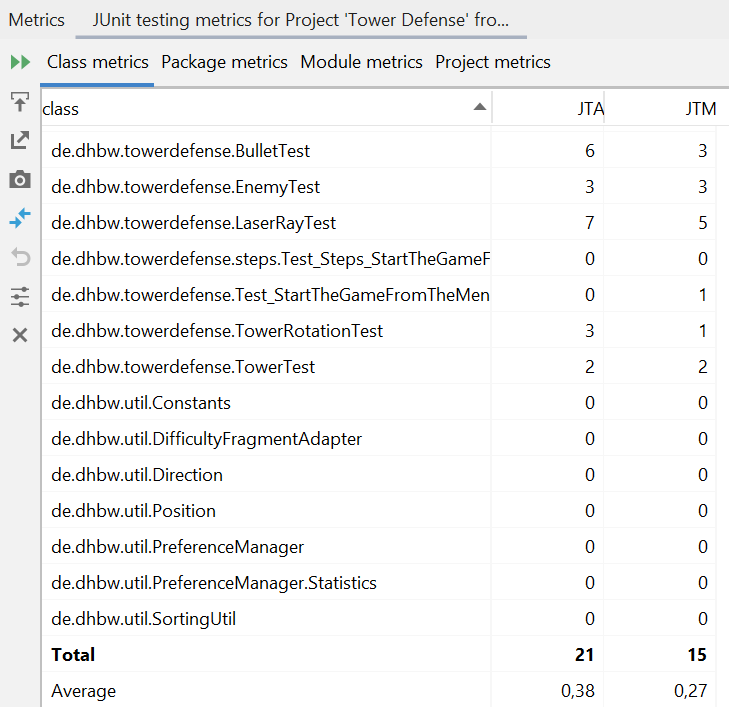

As an example, our plugin offers two specific values per class: "Number of JUnit test assertions" (JTA) and "Number of JUnit test methods" (JTM). Besides the totals of both measurements, the tool provides average values which help to track the density of testing.

Up to now, we had a JTA of 16 (total) or 0.29 (average) and a JTM of 13 (total) or 0.24 (average). A before/after comparison of some refactoring and addition of tests can be viewed below.

BEFORE Refactoring

AFTER Refactoring

In particular, we created some more JUnit tests in order to cover most implementations of our tower-shooting-functionalities.

As a result, both values increased, so that we now have a JTA of 21 (total) or 0.38 (average) and a JTM of 15 (total) or 0.27 (average).
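To make the relation between totals and averages more tangible, here is a small sketch. It assumes that the average is simply the total divided by the number of classes and rounded to two decimals. The class count of 55 is not a number reported by the tool; it is only inferred from the values quoted in this post, so treat it as an assumption.

```java
// Sketch only: assumes average = total / number of classes.
// The class count of 55 is inferred from the quoted numbers, not reported by the plugin.
class TestDensitySketch {

    // Average per class, rounded to two decimals.
    static double averagePerClass(int total, int classCount) {
        return Math.round(100.0 * total / classCount) / 100.0;
    }

    public static void main(String[] args) {
        int assumedClassCount = 55;
        System.out.println("JTA before: " + averagePerClass(16, assumedClassCount));
        System.out.println("JTM before: " + averagePerClass(13, assumedClassCount));
        System.out.println("JTA after:  " + averagePerClass(21, assumedClassCount));
        System.out.println("JTM after:  " + averagePerClass(15, assumedClassCount));
    }
}
```

Under that assumption, five additional assertions move the average by roughly 0.09, which shows how sensitive the value is in a project of this size.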

The commits add-JUnit-Test-Bomb and add-JUnit-Test-SnowFlake contain the recent changes.

In summary, one can say that the higher these values are, the better the test coverage tends to be.

JavaDocs Coverage Metric

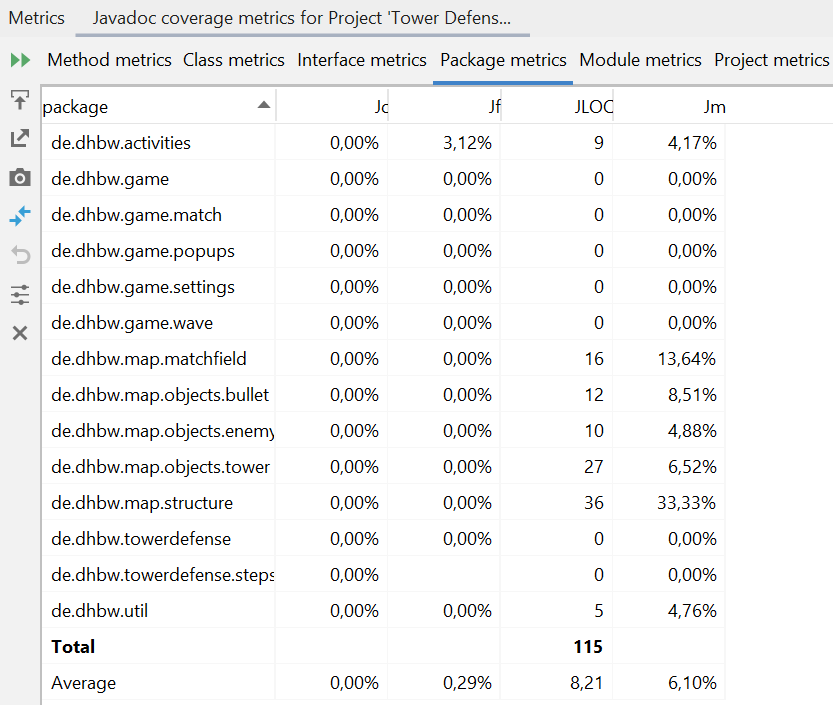

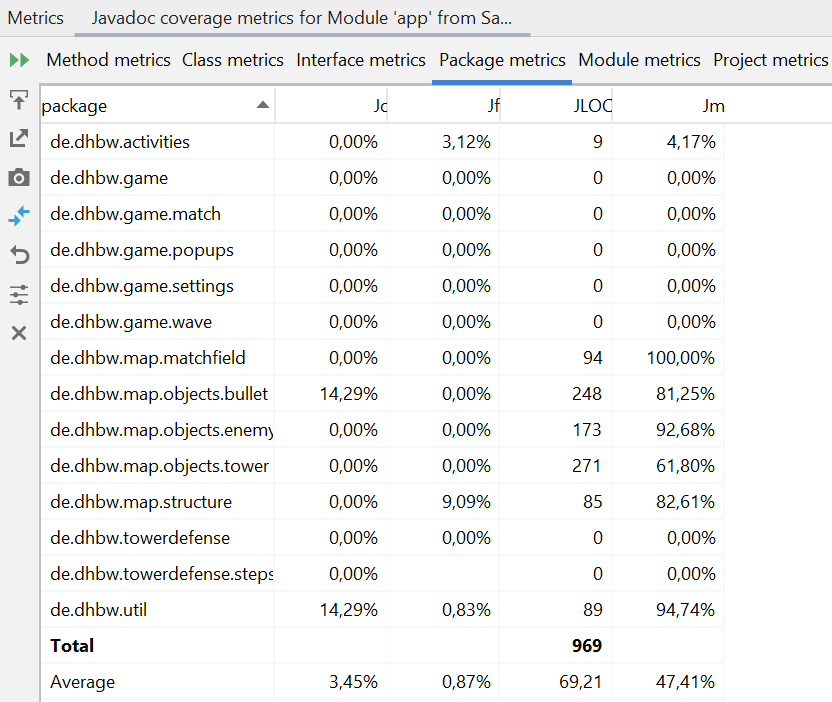

As a third metric, we want to introduce the JavaDocs Coverage Metric. This metric is a very simple one and points out which parts of the code still need some documentation. The values can be shown as totals as well as percentages (see screenshots below).

In our project, we strictly followed some rules of clean code from the beginning and gave most methods meaningful names. Because of that, JavaDocs were redundant. To show you how this metric works, you can find a before/after comparison of the code below.

BEFORE Refactoring

AFTER Refactoring

The corresponding commit can be viewed on GitHub as well.

As one can see, our average Javadoc coverage (right column) increased from 6.1% to 47.41% after we added JavaDocs for about half of all methods/packages.

In the metric overview, it is pretty useful to see what packages/classes/methods/interfaces still need some JavaDocs.
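For readers who have not written JavaDocs before, the following sketch shows the difference between a documented and an undocumented method. The class and its methods are hypothetical and not part of our project. We also assume here that only real Javadoc blocks (starting with /**) count towards the coverage value.

```java
// Sketch only: the class and its methods are hypothetical, not from our project.
class RangeCheckSketch {

    /**
     * Checks whether a target lies within the range of a tower.
     *
     * @param towerX  x coordinate of the tower
     * @param towerY  y coordinate of the tower
     * @param targetX x coordinate of the target
     * @param targetY y coordinate of the target
     * @param range   maximum shooting distance of the tower
     * @return true if the distance to the target is at most {@code range}
     */
    static boolean isInRange(double towerX, double towerY,
                             double targetX, double targetY, double range) {
        return distance(towerX, towerY, targetX, targetY) <= range;
    }

    // Only a line comment, no Javadoc block: under the assumption above,
    // this method would still show up as undocumented in the overview.
    static double distance(double x1, double y1, double x2, double y2) {
        return Math.hypot(x2 - x1, y2 - y1);
    }

    public static void main(String[] args) {
        System.out.println(isInRange(0, 0, 3, 4, 5)); // prints true
    }
}
```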

All in all, this metric is not that important in our case, but it is a nice example because it shows an easy way of increasing quality.

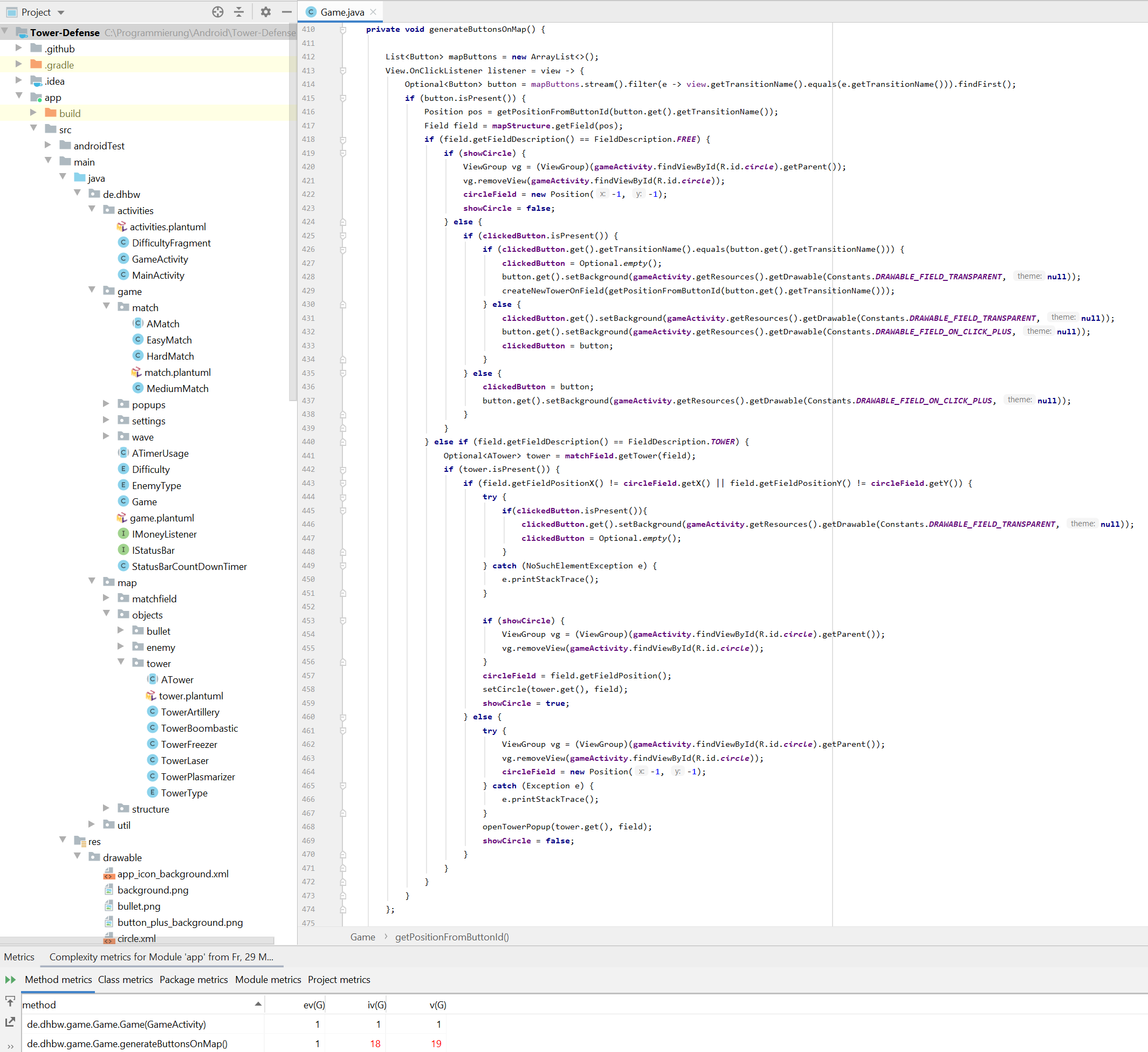

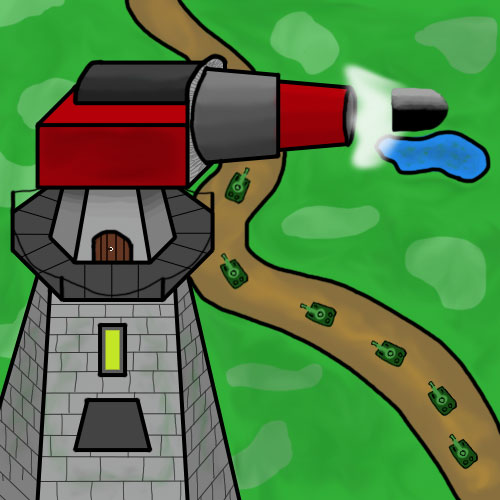

Code which is not going to be refactored

Above, you can see our example of code which is not going to be refactored in order to reduce its complexity value of 19.

The "generateButtonsOnMap()" method is responsible for generating the field structure. It is one of our most fundamental game engine methods, and we do not plan to touch it again because the time needed for refactoring it is disproportionate to the benefit. The method works fine and we have not seen any bugs in it so far.

Metrics - Conclusion

All in all, we can summarize that metrics can help to find weak code spots in general. On the other hand, code snippets highlighted by the tool need to be analyzed by a developer first in order to decide whether the metrics should be improved or not.

In our case, the metrics analysis is only performed locally because most common metrics do not fit very well into the style of programming in the Android framework. As we do have our own conventions which are adapted to programming Android apps, this decision is okay in our opinion.

Big Progress in our CI/CD

Besides the use of metrics, a good CI/CD (Continuous Integration / Continuous Delivery) pipeline is becoming more and more important.

Until now, our CI was not really good. Instead of setting up a dedicated CI tool (such as Jenkins or Travis), we used GitHub Actions, where we had set up an Android CI with a third party tool. Although the tool built and tested the tower defense project, it acted as a black box and nobody was able to take a closer look at what was going on there. Of course, we could have done this better earlier, but as our team wanted to push ahead with the tower defense game first and as none of us had any experience with CI/CD tools, the previous solution was the most practical one for us. But now, this has changed!

Introduction into our use of TravisCI

TravisCI is one of the most popular CI/CD tools. This is the reason why we decided to use it from now on.

After many hours of getting Travis to build our Android application, we finally have a CI which does the following:

- Clean and build the Android project

- Run all JUnit tests (instrumentation tests do not work out properly with our required SDK version)

- Generate an apk file (Android installation file)

- Send a push notification with the build/test status to our Discord for a better overview and an active flow of information

- Deploy the app to our release section on GitHub so that one can download the app to one's own Android device and test it in reality

As you might imagine, this is a big step for us and enables a lot of possibilities in the future (e.g. automatic deployment of the app to Google Play Store).

Of course, all this new information has been added to our testplan document on GitHub, which also contains a badge with the current build status of our app such as the following:

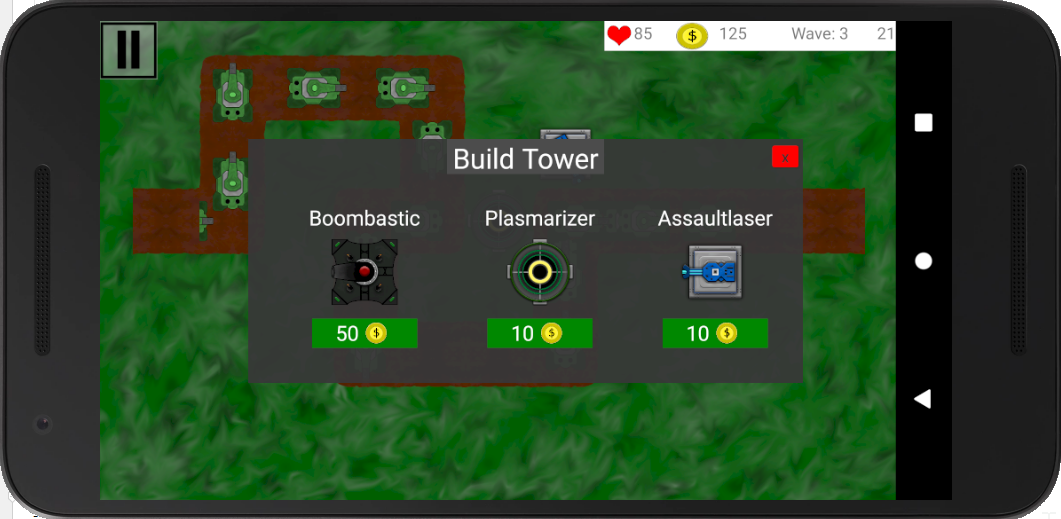

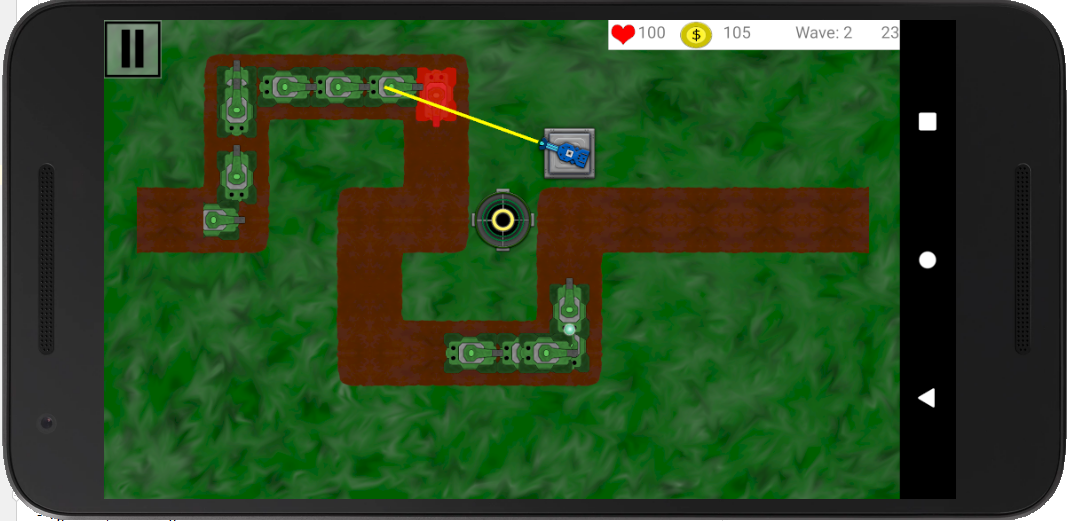

New Tower: Plasmarizer

A recently added tower is called Plasmarizer. The Plasmarizer is a tower with a special shooting mechanism. It shoots a PlasmaBall at the nearest targetable enemy in range. As soon as the PlasmaBall hits the targeted enemy, the bullet can jump to other enemies within a specific range. With each additional enemy hit, the damage of the ball decreases.
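A minimal sketch of such a jumping bullet could look like the code below. It is not our actual implementation: the class names, the jump range, the damage factor and the rules that the ball always jumps to the nearest enemy and hits each enemy only once are assumptions made for illustration.

```java
import java.util.ArrayList;
import java.util.List;

// Sketch only: names, jump range, decay factor and jump rules are hypothetical.
class PlasmaBallSketch {

    static class Enemy {
        final double x, y;
        double health;

        Enemy(double x, double y, double health) {
            this.x = x;
            this.y = y;
            this.health = health;
        }
    }

    // Damages the first target, then keeps jumping to the nearest enemy that
    // has not been hit yet and lies within jumpRange. The damage is multiplied
    // by decayFactor after every hit. Returns the number of enemies hit.
    static int fire(Enemy first, List<Enemy> enemies,
                    double damage, double jumpRange, double decayFactor) {
        List<Enemy> hit = new ArrayList<>();
        Enemy current = first;
        while (current != null) {
            current.health -= damage;
            hit.add(current);
            damage *= decayFactor;
            current = nearestUnhit(current, enemies, hit, jumpRange);
        }
        return hit.size();
    }

    // Returns the closest enemy within jumpRange that was not hit yet, or null.
    static Enemy nearestUnhit(Enemy from, List<Enemy> enemies,
                              List<Enemy> hit, double jumpRange) {
        Enemy best = null;
        double bestDistance = jumpRange;
        for (Enemy e : enemies) {
            if (hit.contains(e)) {
                continue;
            }
            double d = Math.hypot(e.x - from.x, e.y - from.y);
            if (d <= bestDistance) {
                best = e;
                bestDistance = d;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        List<Enemy> enemies = new ArrayList<>();
        enemies.add(new Enemy(0, 0, 100));
        enemies.add(new Enemy(3, 0, 100));
        enemies.add(new Enemy(6, 0, 100));
        enemies.add(new Enemy(50, 0, 100)); // too far away for any jump
        System.out.println(fire(enemies.get(0), enemies, 40, 5, 0.5)); // prints 3
    }
}
```

Since every enemy can only be hit once per shot in this sketch, the chain always ends, even when many enemies stand close together.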

New Tower: Assaultlaser

Another new tower is called Assaultlaser. Unlike all other towers, this tower does not shoot a typical bullet. As the name already says, the Assaultlaser creates a LaserRay. The LaserRay focuses on the nearest enemy in range and keeps shooting as long as the targeted enemy is damaged by the LaserRay. If the enemy moves out of the LaserRay or is killed, the Assaultlaser stops shooting and searches for the next enemy. If enemies that are not explicitly targeted move into the LaserRay, they will be damaged as well.
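The targeting logic described above can be sketched as follows. Again, this is only an illustration and not our real code: the names are hypothetical, and we assume that the ray is a straight segment from the tower to the targeted enemy with a fixed half width.

```java
import java.util.ArrayList;
import java.util.List;

// Sketch only: names and the ray geometry (segment from tower to target
// with a fixed half width) are assumptions.
class LaserRaySketch {

    static class Enemy {
        final double x, y;

        Enemy(double x, double y) {
            this.x = x;
            this.y = y;
        }
    }

    // Returns the enemy closest to the tower within range, or null if none.
    static Enemy nearestInRange(double towerX, double towerY, double range,
                                List<Enemy> enemies) {
        Enemy best = null;
        double bestDistance = range;
        for (Enemy e : enemies) {
            double d = Math.hypot(e.x - towerX, e.y - towerY);
            if (d <= bestDistance) {
                best = e;
                bestDistance = d;
            }
        }
        return best;
    }

    // Shortest distance from point (px, py) to the segment (ax, ay)-(bx, by).
    static double distanceToSegment(double px, double py,
                                    double ax, double ay, double bx, double by) {
        double dx = bx - ax;
        double dy = by - ay;
        double lengthSquared = dx * dx + dy * dy;
        if (lengthSquared == 0) {
            return Math.hypot(px - ax, py - ay);
        }
        // Project the point onto the segment and clamp to its end points.
        double t = ((px - ax) * dx + (py - ay) * dy) / lengthSquared;
        t = Math.max(0, Math.min(1, t));
        return Math.hypot(px - (ax + t * dx), py - (ay + t * dy));
    }

    // All enemies touched by the ray, whether explicitly targeted or not.
    static List<Enemy> enemiesInRay(double towerX, double towerY, Enemy target,
                                    List<Enemy> enemies, double halfWidth) {
        List<Enemy> damaged = new ArrayList<>();
        for (Enemy e : enemies) {
            double d = distanceToSegment(e.x, e.y, towerX, towerY, target.x, target.y);
            if (d <= halfWidth) {
                damaged.add(e);
            }
        }
        return damaged;
    }

    public static void main(String[] args) {
        List<Enemy> enemies = new ArrayList<>();
        enemies.add(new Enemy(4, 0));
        enemies.add(new Enemy(6, 5));
        Enemy target = nearestInRange(0, 0, 10, enemies);
        enemies.add(new Enemy(2, 0.2)); // walks into the ray later
        System.out.println(enemiesInRay(0, 0, target, enemies, 0.5).size());
    }
}
```

In this sketch the tower would keep its target while the target is still part of the returned list and search for a new one otherwise.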

To be honest, this tower can become very strong when it is positioned cleverly. Because of this, the game balancing (which will come up very soon) needs to be done carefully.

Because pictures cannot demonstrate all of our progress so far, we uploaded our current app to appetize.io so that you can play it yourself, if you want. Here is the link: https://appetize.io/app/w4nbd746n40k2wajrfzp03vpuc

Summary

All in all, we are happy to present so much new stuff to you again. Besides the progress in the game itself, we managed to make use of metrics and set up a well-working CI. This means that most requirements of a good software engineering project are fulfilled and we can focus on the following tasks from now on:

- game balancing

- implementation of some details

- optimization

- usability tests

- getting the project ready for the final presentation

That's it for now. See you next week!

Hello Team Tower Defense,

I really enjoyed reading your blog entry. It was well structured and clear which metrics you chose, how you implemented these changes and why.

You also explained a spot in your code where metrics suggest that you should implement a change, but you decided not to. All in all, well done. I think you fulfilled the grading criteria.

Your progress in your CI/CD is an important step and it is great that you got it to work even though none of you has experience with setting CI/CD up. You mentioned the Google Play Store. Do you plan to publish your app once it is done?

It was interesting to read about your future plans. I look forward to reading your next blog.

Kind regards,

bookly

Hi Team bookly,

thank you for the extensive feedback!

At the moment, our plan is to publish our app in the Google Play Store after the provisional end of development (in a few weeks).

We will keep you up to date :)!

Kind regards,

Team Tower Defense

Hi there, in short we do not have anything to complain about.

You mention two metrics and explain them very well afterwards, including the improvements you guys have gained by raising these.

– Team cozy

Hi Team cozy,

thanks for your feedback concerning the use of metrics!

Kind regards,

Team Tower Defense